I have grown weary of being lectured by politicians and professional health advocates about how expensive the ‘Better Access’ program has become. I am especially sick of the entire discussion being framed in terms of cost.

This critique is naïve; as any economist will tell you, the true test of value is not cost but return on investment.

So I would like to frame this article in terms of the value of psychological services. As a profession, we need to be able to demonstrate the value of the services we provide. I have spent the last seven years in private practice working towards exactly this goal through the use of routine outcome evaluation. But first, let’s take a quick look at the cost side of the ledger.

Economics of Mental Health

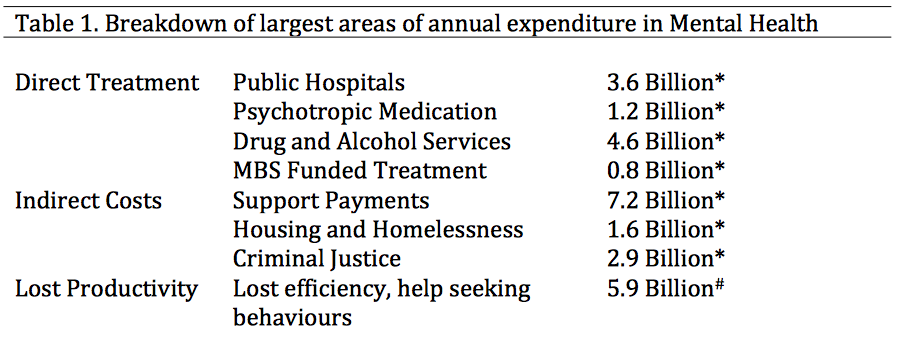

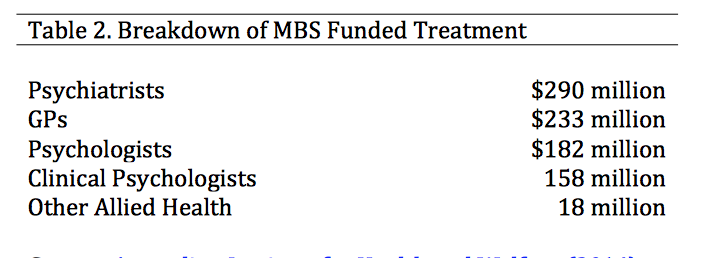

Estimates vary, but Australia spends between $7 billion and $13 billion annually on the direct treatment of mental illness. On top of this, we spend another $15 billion annually on indirect costs. Finally, mental illness costs the economy approximately $6 billion annually in lost productivity.

*Source: Medibank: The case for mental health reform in Australia (2014)

#Source: Hilton, Scuffham & Whiteford (2010)

As you can see from Table 1, direct Medicare-funded treatment services make up around $800 million annually (or around 7% of the total direct costs). This is broken down further in Table 2, where you can see that GPs, Psychologists, and other Allied Health Providers (Better Access) contribute a little less than $600 million.

Source: Australian Institute of Health and Welfare (2014)

Is spending $600 million on these services a worthwhile investment of public funds?

In order to consider the return on this investment, we need to answer three important questions:

1. What is the potential amount of savings that could be achieved through treatment?

People who are treated are less likely to consume indirect services like welfare and the criminal justice system ($15 Billion). They are also likely to be more productive in their workplace ($5.9 Billion).

Potential Savings = $20.9 Billion

2. How many people are treated?

In 2009-2010, 46% of those with mental health conditions accessed services. So of the costs associated with mental illness, treatment can at most hope to reduce the costs attributable to that 46% of the population.

Potential Savings = $9.6 Billion

3. Of those who are treated, how many of them benefit from treatment?

According to a synthesis of large international meta-analyses of psychotherapy efficacy trials, approximately 70% of treated clients will experience clinically significant improvement. However, in a large study of real-world treatment outcomes, only 16.6% of treated clients improved. This discrepancy presents a real credibility gap for our profession, and massively limits the effectiveness of any advocacy that we can do.

Potential Savings (Upper Limit) = $6.7 Billion

Potential Savings (Lower Limit) = $1.6 Billion
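The arithmetic behind the three questions above can be reproduced in a few lines. This is only a sketch of the article's own back-of-envelope calculation: the dollar figures and rates come from the text, while the constant and function names are illustrative.

```python
# All dollar figures are in billions of AUD and come from the article.
INDIRECT_COSTS = 15.0      # welfare, criminal justice and other indirect services
LOST_PRODUCTIVITY = 5.9    # lost workplace productivity
ACCESS_RATE = 0.46         # share of people with a condition who accessed services
EFFICACY_RATE = 0.70       # improvement rate reported in efficacy trials
REAL_WORLD_RATE = 0.166    # improvement rate reported in the real-world study


def potential_savings(pool, access_rate, improvement_rate):
    """Scale the savings pool by who gets treated and who improves."""
    return pool * access_rate * improvement_rate


pool = INDIRECT_COSTS + LOST_PRODUCTIVITY                      # question 1
treated_pool = pool * ACCESS_RATE                              # question 2
upper = potential_savings(pool, ACCESS_RATE, EFFICACY_RATE)    # question 3, upper limit
lower = potential_savings(pool, ACCESS_RATE, REAL_WORLD_RATE)  # question 3, lower limit

for label, value in [
    ("Total pool", pool),
    ("After access rate", treated_pool),
    ("Upper limit", upper),
    ("Lower limit", lower),
]:
    print(f"{label}: ${value:.1f} billion")
```

Swapping in a different improvement rate shows how sensitive the final figure is to the answer to the third question.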

Remember, these are only theoretical upper limits. It would be foolish to suggest that the true economic value is anywhere near these figures.

The point remains, though, that finding $600 million worth of value within $6.7 billion of potential savings is a lot easier than finding it within $1.6 billion.

However, in the absence of reliable data from psychologists as to the effectiveness of our services, policy makers will be left to draw conclusions from the two numbers above.

How can we improve the accuracy of these estimates?

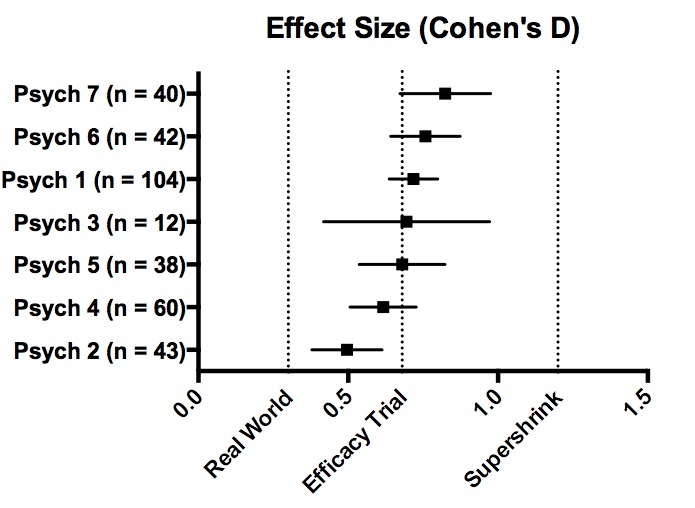

In our practice we have chosen to ensure that there is no question of how effective our services are. Informed by the pioneering work of Michael Lambert and Scott Miller, we routinely track client outcomes using a brief symptom-monitoring tool every session. We are then able to calculate effect size as the difference in symptom scores between the first and the final session.
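As a rough illustration of a pre-post effect size, here is a minimal sketch. The article does not give the exact formula, so this assumes one common convention: the mean drop in symptom scores divided by the standard deviation of first-session scores. The function name and the scores are made up for the example and are not data from the practice.

```python
from statistics import mean, stdev


def pre_post_effect_size(first_scores, final_scores):
    """Mean symptom reduction divided by the SD of first-session scores.

    Assumes higher scores mean more severe symptoms, so a positive
    result indicates improvement. This standardisation is an assumption,
    not necessarily the one used in the article.
    """
    if len(first_scores) != len(final_scores):
        raise ValueError("each client needs a first and a final score")
    changes = [first - final for first, final in zip(first_scores, final_scores)]
    return mean(changes) / stdev(first_scores)


# Hypothetical scores for five clients (not real data).
first = [30, 26, 34, 28, 32]
final = [20, 22, 24, 25, 21]

effect_size = pre_post_effect_size(first, final)
print(f"Pre-post effect size: {effect_size:.2f}")
```

Dividing by the first-session spread puts clinicians and practices on a common scale, which is what makes comparisons against published benchmarks possible.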

Therefore I can confidently say that 67% of clients treated by our services last year experienced clinically significant gains. If our figures are representative of the entire profession, then the case for advocacy is much stronger. This type of improvement would suggest total potential savings of $4.8 Billion. If this is the actual potential pool, then treated clients need only reduce indirect costs and increase productivity by 12.5% in order for the program to break even.
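The break-even figure is simply program cost divided by the pool of potential savings. The sketch below applies that ratio to the three pools quoted in this article; the labels and names are illustrative, and the pool sizes are taken from the text as stated.

```python
PROGRAM_COST = 0.6  # billions of AUD: approximate Better Access spending

# Pools of potential savings quoted in the article, in billions of AUD.
SAVINGS_POOLS = {
    "efficacy trials (70%)": 6.7,
    "our practice data (67%)": 4.8,
    "real-world study (16.6%)": 1.6,
}


def break_even_share(cost, pool):
    """Fraction of the pool that must be realised to cover the program cost."""
    return cost / pool


shares = {
    label: break_even_share(PROGRAM_COST, pool)
    for label, pool in SAVINGS_POOLS.items()
}

for label, share in shares.items():
    print(f"{label}: break even at {share:.1%} of potential savings")
```

The smaller the pool, the larger the share of it the program has to capture, which is why the assumed improvement rate matters so much for advocacy.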

An accurate estimate of psychology services effect sizes is needed. I am in the process of collating data from a number of Australian private practices to present a more comprehensive estimate of psychology effect sizes. Click here to see the results so far. If you collect outcome data, I would love to hear from you to include your overall effects in this estimate.

Benefits to clients of tracking outcomes

Besides being good in terms of accountability, routine evaluation has the added virtue of actually being good practice. There is mounting evidence that simply collecting client outcome data improves therapy effectiveness. In our practice, this has had the following effects:

- Early identification of clients who are likely to drop out

- Detection of clients who are not benefiting from treatment

- Detection of clients who are deteriorating

When we identify a client who is not improving, this allows the clinician to reformulate their thinking. Perhaps the diagnosis is incorrect, perhaps there is something missing in the conceptualization, perhaps the treatment of choice just doesn’t fit for this client.

Collecting client outcome data has improved the overall measured outcomes for our clients as well as reducing dropout.

Clinician Benefits

At the end of last year, we were able to produce the data in Figure 2. This graph shows the effect size of each clinician within our practice. It is incredibly reassuring for all of us to see that we are doing OK and that, for the most part, we are achieving outcomes consistent with best practice.

Figure 2. Effect size comparisons of psychologists at Benchmark Psychology (2014)

With the ever-increasing need for private, public and NGO service delivery agencies to demonstrate return on investment and accountability, these systems also form a major part of our commitment to quality assurance and professional development with our clinicians.

Enhancing Supervision

By delving a bit deeper into the data, clinicians are able to identify their areas of weakness in order to improve.

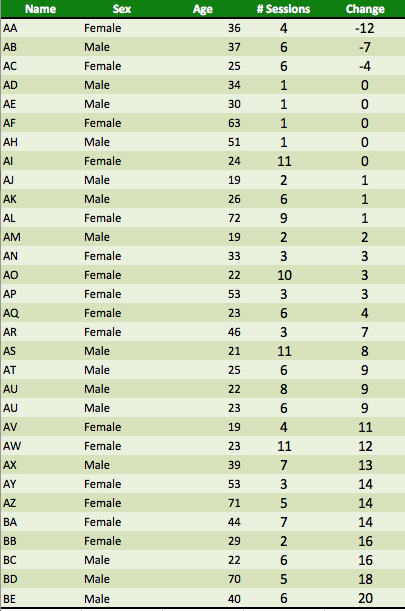

To give an example: below is the complete 2014 data for one of our clinicians, arranged in order from least to most successful therapy (NB: negative scores indicate deterioration, positive scores indicate improvement).

Table 3. Sample data from one Clinician. NB: All data in this table is fabricated to protect confidentiality, but it mimics the pattern of data seen by one of our psychologists.

From the point of view of supervision, it is now easy to identify a number of cases that are worth auditing to see what lessons can be learned:

- There are 4 single-session drop-outs (why did they drop out, and what can the psychologist learn to prevent this in future?)

- There are 3 clients who engaged with therapy but got worse (was there any way the therapist could have predicted this earlier or intervened sooner?)

- There are 5 clients who attended a few sessions, got some benefit and then discontinued (would there have been added benefit in engaging with them for longer, and how could the psychologist have done that?)

Each of these case audits has the potential to teach the clinician valuable lessons about how to improve their performance in future.
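The three audit groups described above can be picked out of a clinician's outcome data automatically. This is a minimal sketch under stated assumptions: the `Case` fields, the `FEW_SESSIONS_MAX` cut-off for "a few sessions", and the sample rows are all hypothetical, chosen only to mirror the categories in the list.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    client_id: str
    sessions: int   # number of sessions attended
    change: float   # positive = improvement, negative = deterioration


FEW_SESSIONS_MAX = 4  # assumed cut-off for "a few sessions"


def audit_flag(case: Case) -> Optional[str]:
    """Return the audit category for a case, or None if no audit is suggested."""
    if case.sessions == 1:
        return "single-session drop-out"
    if case.change < 0:
        return "engaged but deteriorated"
    if case.change > 0 and case.sessions <= FEW_SESSIONS_MAX:
        return "early discontinuation with some benefit"
    return None


# Made-up rows for illustration only.
cases = [
    Case("A", sessions=1, change=0.0),
    Case("B", sessions=6, change=-3.0),
    Case("C", sessions=3, change=2.0),
    Case("D", sessions=10, change=9.0),
]

# Sort from least to most successful, then keep only the flagged cases.
flagged = {}
for case in sorted(cases, key=lambda c: c.change):
    flag = audit_flag(case)
    if flag is not None:
        flagged[case.client_id] = flag

for client_id, flag in flagged.items():
    print(f"Client {client_id}: {flag}")
```

A list like this is only a starting point for supervision; the cut-offs would need to be agreed within the practice.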

Conclusion

Better Access represents a relatively large piece of government spending, and it should rightly be subject to strict evaluation of its effectiveness. I have been involved in service evaluations since 2001, and feel confident in saying that there is a direct relationship between how seriously a program is committed to outcome evaluation, and its 10-year survival rate. Fashions change, governments change and so do funding priorities. However, services that are well sandbagged by a wall of evidence are more likely to ride out these disruptions.

When we as a profession are better able to demonstrate our effectiveness, we will be less vulnerable to political caprice or expedient cost cutting. In addition to simply being accountable, tracking outcomes actually improves client outcomes as well as delivering direct benefits to both clinicians and supervisors.

I’ve tried medications in the past and found they didn’t help a ton, I didn’t like the side effects, and sometimes they can backfire, making you more anxious. So I stick to natural. What works for me is:

- taking a walk to clear my mind

- playing Scramble on Facebook. I have severe OCD/paranoid social complexes and Scramble really distracts my mind and demands my complete attention.

- reading, games, sports, Bowflex, my upright bike, being active

- drinking water regularly

- vitamin C and eating healthy: minimizing sugar, avoiding caffeine and soda

- reminding myself it’s mind over matter. Change your philosophy/what you believe. You can overcome anything and be anything. Your mind too is constantly renewing itself.

Thanks, Aaron, for actively pursuing this issue. I completely endorse your observations regarding the power of outcome data to protect the profession, improve outcomes for clients and improve clinical skills.

Like you, I regularly use measures to track progress, and it can be a sobering exercise, counteracting the bias to evaluate one’s effectiveness positively on the basis of our imperfect memories of who benefited from our interventions.

As the clinical lead in the Hilton, Scuffham and Whiteford study that you referenced, I was acutely aware of the focus on return on investment and how this informs policy at a government level. Anecdotal evidence at this level is of no value and no consequence. It simply does not inform decision making. As a profession, psychology and psychologists can seem to be remarkably naive about the process of policy making.